By DSG Group on February 29, 2016

If you were to ask any technical professional working in the digital humanities (DH) today about the state of innovation in the field, you would probably hear many similar responses. I’m sure most would tell you that it is an exciting and interesting time to be involved with the humanities, as a myriad of new projects are undertaken, tools are developed, and new applications for those tools are found. It seems like every day there are advances in areas such as web applications, cloud services, virtual reality, data analysis, and infrastructure technologies, all of which have the potential to push the DH envelope even further.

However, if working with technology has taught me anything, it is that it can be all too easy to forget that behind every program, screen, keyboard, or phone there is a person with a wealth of life experience, talent, and skills who is using that device as a means to a final goal. In my opinion, any of these new products gains value only from its ability to bring different people (whoever and wherever they may be) together for the advancement of our collective knowledge, communication, and mutual achievement.

In keeping with that mindset, I would like to highlight some of the technology that is being used to bring two worlds together: the hearing and the Deaf*. For a large part of the 20th century, technological developments for the Deaf were aimed simply at increasing accessibility to a hearing world; they were very much a ‘one way street’ set of tools. In 1964, teletypewriter systems were invented and finally provided access to a phone infrastructure that the hearing had already been using for decades. Captioning for television and films, while under development in the 1970s, was not in widespread use until the 1990s, when the Television Decoder Circuitry Act made it mandatory for televisions with screens 13 inches or larger to support closed-captioned transmissions. The recently passed 21st Century Communications and Video Accessibility Act (CVAA) expanded on previous legislation to bring it into alignment with current technology. Along with the increased legal protections, there have also been many developments that focus on the goals of standard communication and collaboration I mentioned previously, and many of them serve as an equalizer between the deaf and hearing communities.

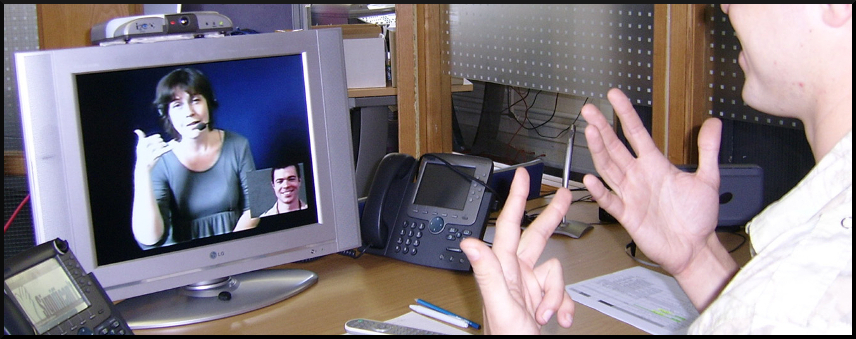

Video Relay Services (VRS) are one piece of technology that has helped remove barriers to communication between the Deaf and hearing. Using a phone, tablet, webcam, or other hardware, a signer sets up a video call with an interpreter, who in turn places an audio phone call to the intended recipient. This method makes it easy for natural communication to take place from anywhere in the world. While the concepts behind video phone technology date back as far as the 1960s, interpretation services were not integrated into the system until the early 2000s, and their availability in the United States has continued to grow ever since.

There are several companies contributing to the development of VRS systems. While each of the following businesses provides relay services, each has taken its own approach and added products or features to enhance the user experience. Sorenson Communications has focused on creating an array of apps for PC, Mac, and mobile platforms to allow VRS calling 24 hours a day, wherever an internet connection is available. It also produces its own library of video content tailored specifically to a Deaf audience. Convo Communications, a wholly Deaf-owned and operated business, is the first of its kind to offer an effective visual emergency and public notification system. Convo has also worked with the Philips Hue lighting system to produce an application that uses variations in lighting colors and patterns to alert Deaf users to auditory signals they might otherwise miss. Not only is this a fantastic addition to the VRS calling model, it also opens up possibilities for a multi-sensory approach to environmental design in both the home and the workplace. Purple Communications has created its own piece of VRS hardware that combines the aforementioned VRS functionality with a suite of other apps such as YouTube, YellowPages, weather, and a library of on-demand captioned video, providing not only communication but also entertainment and information options.

Image credit: MotionSavvy LLC via http://www.motionsavvy.com/

Another area of development involves the interpretation of sign language for a hearing audience and the recognition of speech for a Deaf one, to further integrate the two communities. MotionSavvy grew out of research by students at the Rochester Institute of Technology’s National Technical Institute for the Deaf and is developing a new set of software tools designed to enable more fluid and seamless communication by acting as both a digital interpreter for signed languages and a voice recognition system for speech. Using a tablet device and cameras developed by Leap Motion that specifically track and interpret hand movement, MotionSavvy’s UNI software can recognize signed languages and synthesize speech for a hearing participant. In the other direction, MotionSavvy uses Nuance Communications’ well-known speech-to-text software so that spoken dialog can be read as text. While not meant to replace human interpreters, the UNI system aims to present another option for communication and to fill a void when an interpreter is not available.

A few other projects share the goal of interpreting signed languages for a hearing listener but take a different approach to the task: a pair of gloves embedded with sensors. In 2012, a small team of students from Ukraine took home first prize at Microsoft’s annual Imagine Cup with their presentation of the EnableTalk sign language glove. When the glove is worn by a signer, a collection of gestural and contact sensors translates the motion of sign language into synthesized speech. Research into this medium is also being conducted at Goldsmiths, University of London by Hadeel Ayoub, a student who aims to integrate it with wireless and mobile phone technology to allow for a wide array of communication and translation functions. While both of these devices are still in the early stages, the continuing miniaturization of technology makes this application’s development very promising and interesting to follow.

Finally, I would like to touch on two organizations that, while not explicitly designing their own technology, are using it to share experiences, open lines of dialog, and provide new perspectives on what communication really means. The Deaf Culture Centre in Toronto, Canada, not only documents the many contributions and achievements of deaf individuals but also serves as a convergence point between the deaf and hearing communities. It uses art, technology, and an educational curriculum to bring these two groups together. The Israel Children’s Museum hosts an exhibit called “Invitation To Silence”, which uses various forms of technology to allow participants to explore how non-verbal communication is naturally expressed and understood by everyone. These lessons are invaluable in breaking down the assumed barriers between signed and spoken languages, and they show that it is not the method of communication that is important, but the messages that are conveyed.

It is my sincere hope that the makers of the tools mentioned in this article, as well as every technology professional in the DH field, continue to strive towards the goals of unity and collaboration, regardless of who is involved or where the opportunity arises. It truly is a great time to be involved in the humanities, since there are countless new projects to undertake and research to explore. However, we must all work together to see it through. As the DH name implies, it is important to remember that with every digital advancement there will always be a human side to the equation that is necessary for any real progress to be made.

*For an explanation of the difference between ‘deaf’ and ‘Deaf’, see https://nad.org/issues/american-sign-language/community-and-culture-faq.

Featured image by SignVideo, London, U.K. (Significan’t SignVideo, London, U.K.) [GFDL (http://www.gnu.org/copyleft/fdl.html) or CC BY-SA 4.0-3.0-2.5-2.0-1.0 (http://creativecommons.org/licenses/by-sa/4.0-3.0-2.5-2.0-1.0)], via Wikimedia Commons

Resources:

- Television Decoder Circuitry Act: https://nad.org/issues/civil-rights/television-decoder-circuitry-act

- 21st Century Communications and Video Accessibility Act (CVAA): https://www.fcc.gov/consumers/guides/21st-century-communications-and-video-accessibility-act-cvaa

- Sorenson Communications: http://www.sorenson.com/

- Convo Communications: https://www.convorelay.com/index.html

- Purple Communications: http://www.purple.us/

- MotionSavvy: http://www.motionsavvy.com/

- EnableTalk Project: http://enabletalk.com/

- Hadeel Ayoub’s sign language glove: http://www.zdnet.com/article/students-smart-glove-translates-sign-language-into-speech/

- Deaf Culture Centre in Toronto, Canada: http://www.deafculturecentre.ca/Public/Default.aspx?I=7&n=About+Us

- ‘Invitation to Silence’ at the Israel Children’s Museum: http://www.childrensmuseum.org.il/eng/template/?cid=39

Chris Pompeo is a Departmental Technology Analyst at the UCLA Center for Digital Humanities, where he and the other DTAs provide support for the various needs of the Humanities faculty and staff. He received his MS in Information Technology Leadership from La Salle University in Philadelphia, PA, and joined HumTech in 2014. Chris is fascinated by seeing science fiction become science reality and believes we live in a time where such things happen.

Benjamin Lewis is the first Deaf lecturer to work in the ASL program at the University of California, Los Angeles, and is a professor of both ASL and Deaf History. Fluent in ASL, Japanese Sign Language (JSL), and New Zealand Sign Language (NZSL), he is fascinated by watching how not only Deaf people but also humans in general use their hands to communicate with others by incorporating signs, gestures, and visual movements.